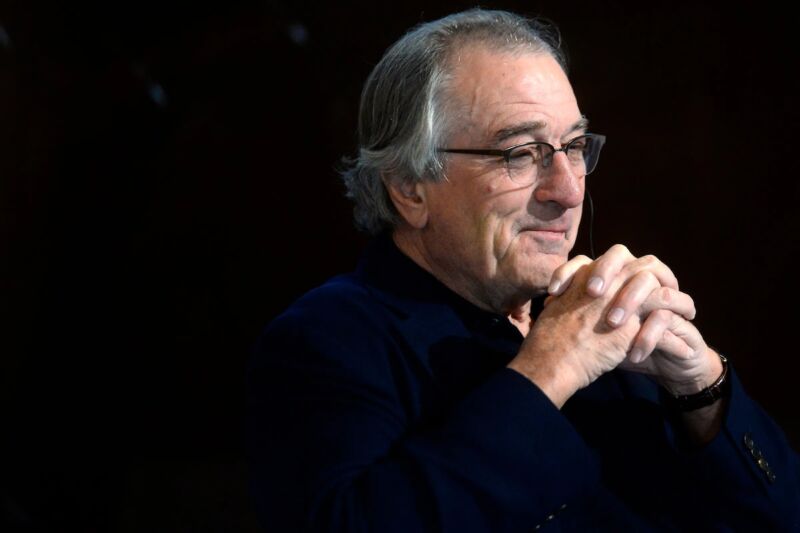

Sprichst du mit mir?

Technology related to deepfakes helps match facial movements to dialogue.

Will Knight, wired.com

Paul Bednyakov | Getty Images

You talkin’ to me… in German?

New deepfake technology allows Robert De Niro to deliver his famous line from Taxi Driver in flawless German, with realistic lip movements and facial expressions. The AI software manipulates an actor’s lips and facial expressions to make them convincingly match the speech of someone speaking the same lines in a different language. The technology could reshape the movie industry, in both alluring and troubling ways.

The technology is related to deepfaking, which uses AI to paste one person’s face onto someone else’s. It promises to allow directors to effectively reshoot movies in different languages, making foreign versions less jarring for audiences and more faithful to the original. But the power to alter an actor’s face automatically and so easily might also prove controversial if not used carefully.

The AI dubbing technology was developed by Flawless, a UK company cofounded by the director Scott Mann, who says he became tired of seeing poor foreign dubbing in his films.

After watching a foreign version of his then-most recent movie, Heist, which stars De Niro, Mann says he was appalled by how the dubbing ruined carefully crafted scenes. (He declines to specify the language.) Dubbing sometimes involves changing dialog significantly, Mann says, in an effort to make it more closely match an actor’s lip movements. “I remember just being devastated,” he says. “You make a small change in a word or a performance, it can have a large change on a character in the story beat, and in turn on the film.”

Mann began researching academic work related to deepfakes, which led him to a project involving AI dubbing led by Christian Theobalt, a professor at the Max Planck Institute for Informatics in Germany. The work is more sophisticated than a conventional deepfake. It involves capturing the facial expressions and movements of an actor in a scene as well as someone speaking the same lines in another language. This information is then combined to create a 3D model that merges the actor’s face and head with the lip movements of the dubber. Finally, the result is digitally stitched onto the actor in a scene.
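The merging step described above can be illustrated with a minimal sketch. It is not Flawless’s or Theobalt’s actual method; it only assumes, for illustration, that each video frame has already been reduced to a few lists of numbers describing a face. The `FaceFrame` class, its field names, and both functions are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FaceFrame:
    """Hypothetical per-frame face parameters (an assumption, not a real format)."""
    identity: List[float]    # face shape: who the person is
    head_pose: List[float]   # head rotation and position
    expression: List[float]  # upper-face expression (brows, eyes)
    mouth: List[float]       # lip and jaw movement


def merge_frame(actor: FaceFrame, dubber: FaceFrame) -> FaceFrame:
    """Keep the actor's face, head, and expression; take the mouth from the dubber."""
    return FaceFrame(
        identity=list(actor.identity),
        head_pose=list(actor.head_pose),
        expression=list(actor.expression),
        mouth=list(dubber.mouth),
    )


def merge_performance(
    actor_frames: List[FaceFrame], dubber_frames: List[FaceFrame]
) -> List[FaceFrame]:
    """Merge two performances frame by frame; both must have the same length."""
    if len(actor_frames) != len(dubber_frames):
        raise ValueError("actor and dubber must have the same number of frames")
    return [merge_frame(a, d) for a, d in zip(actor_frames, dubber_frames)]
```

A real system would still have to render the merged model and stitch it back onto the actor in the footage, the final step the researchers describe; that part is outside this sketch.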

Flawless drew inspiration from Theobalt’s project for its product. Mann says the company is in discussions with studios about creating foreign versions of several movies. Other demo clips from Flawless show Jack Nicholson and Tom Cruise delivering famous lines from A Few Good Men in French and Tom Hanks speaking Spanish and Japanese in scenes from Forrest Gump.

“It’s going to be invisible pretty soon,” says Mann. “People will be watching something and they won’t realize it was originally shot in French or whatever.”

Mann says Flawless is also looking at how its technology could help studios avoid costly reshoots by having an actor appear to say new lines. But he says some actors are a little unsettled when they see themselves manipulated using AI. “There’s a fear and wow—they’re the two reactions I keep getting,” he says.

Virginia Gardner, an actress who stars in Mann’s latest movie and has seen herself speaking Spanish thanks to Flawless’ software, doesn’t seem too worried, although she assumes that films modified with AI would include a disclaimer. “I think this is the best way to be able as an actor to preserve your performance” in another language, she says. “If you’re trusting your director and you’re trusting that this process is only going to make a film better, then I really don’t see a downside to it.”

AI video manipulation is controversial—and for good reason. Free deepfake programs that can seamlessly swap one person’s face onto someone else in a video scene have proliferated with advances in AI. The software identifies key points on a person’s face and uses machine learning to capture how that person’s face moves.

The technology has been used to create fake celebrity porn and damaging revenge-porn clips targeting women. Experts worry that deepfakes showing a famous person in a compromising situation might spread misinformation and sway an election.

Off-the-shelf face manipulation might prove controversial in the movie industry. Darryl Marks, founder of Adapt Entertainment, a company in Tel Aviv that is working on another AI dubbing tool, says he isn’t sure how some actors might react to seeing their performance altered, especially if it isn’t clear that it’s been done by a computer. “If there’s a very famous actor, they might block it,” he says.

“There are legitimate and ethical uses of this technology,” says Duncan Crabtree-Ireland, general counsel of the Screen Actors Guild. “But any use of such technology must be done only with the consent of the performers involved, and with proper and appropriate compensation.”

Hao Li, a visual effects artist who specializes in using AI for facial manipulation, says movie directors and producers show growing interest in deepfakes and related AI technology. Creating a deepfake normally requires hours of algorithmic processing, but Li is working on a movie where more advanced deepfake software lets a director see an actor transformed in real time.

Li says the situation feels similar to when photo-realistic computer graphics became widely available in the 2000s. Now, thanks to AI, “suddenly everyone wants to do something,” he says.

This story originally appeared on wired.com.